
“Beware the Ides of March.” Yes, it’s finally that time of year again, when the emperors of college basketball must watch their backs, lest the lowly bottom seeds of the tournament strike.

Matthew Osborne

Kevin Nowland

The first step to a perfect bracket is correctly choosing the first round. Unfortunately, most of us can’t predict the future. Last year, only 164 of the 18.8 million brackets submitted to ESPN were perfect through the first round – less than 0.001 percent.

18.8 million brackets submitted.

164 are perfect after Round 1.

Here’s to overachieving. #perfectbracketwatch pic.twitter.com/TGwZNCzSnW

— ESPN Fantasy Sports (@ESPNFantasy) March 18, 2017
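As a quick check on the “less than 0.001 percent” figure, here is the arithmetic with the numbers from the tweet above. The 18.8 million total is already rounded, so this is approximate.

```python
# Figures from the ESPN tweet quoted above (18.8 million is a rounded total).
submitted = 18_800_000
perfect_after_round_1 = 164

# Share of brackets still perfect after Round 1, as a percentage.
percent_perfect = perfect_after_round_1 / submitted * 100
print(f"{percent_perfect:.5f} percent")  # 0.00087 percent
print(percent_perfect < 0.001)           # True
```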

Many brackets are busted when a lower-seeded team upsets the favored higher seed. Since the field expanded to 64 teams in 1985, there have been at least eight upsets on average each year. If you want to win your bracket pool, you had better pick at least a few upsets.
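To make “upset” concrete, here is a minimal sketch that counts upsets from seed pairs and averages them per tournament. It assumes an upset simply means the team with the larger seed number won, so a No. 9 seed beating a No. 8 seed counts. The names `Game`, `is_upset`, `count_upsets` and `average_upsets` are hypothetical, and the results are made up for illustration, not real tournament data.

```python
from dataclasses import dataclass


@dataclass
class Game:
    """One first-round result, recorded by the seeds of the two teams."""
    winner_seed: int
    loser_seed: int


def is_upset(game: Game) -> bool:
    # Assumed definition: the team with the larger seed number (the lower seed) won.
    return game.winner_seed > game.loser_seed


def count_upsets(games: list[Game]) -> int:
    return sum(is_upset(g) for g in games)


def average_upsets(seasons: dict[str, list[Game]]) -> float:
    """Mean number of upsets per tournament across several seasons."""
    return sum(count_upsets(games) for games in seasons.values()) / len(seasons)


# Hypothetical results for two made-up seasons, NOT real tournament data.
seasons = {
    "season_a": [Game(1, 16), Game(9, 8), Game(12, 5), Game(2, 15), Game(11, 6)],
    "season_b": [Game(1, 16), Game(10, 7), Game(3, 14)],
}
print(count_upsets(seasons["season_a"]))  # 3
print(average_upsets(seasons))            # 2.0
```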

We’re two math Ph.D. candidates at The Ohio State University who have a passion for data science and basketball. This year, we decided it would be fun to build a computer program that uses a mathematical approach to predict first-round upsets. If we’re right, a bracket picked using our program should perform better through the first round than the average bracket.

Fallible Humans

It’s not easy to identify which of the first-round games will result in an upset.

Say you have to decide between the No. 10 seed and the No. 7 seed. The No. 10 seed has pulled off upsets in its past three tournament appearances, once even making the Final Four. The No. 7 seed is a team that’s received little to no national coverage; the casual fan has probably never heard of them. Which would you choose?

If you chose the No. 10 seed in 2017, you would have gone with Virginia Commonwealth University over Saint Mary’s of California – and you would have been wrong. Thanks to a decision-making fallacy called recency bias, humans can be tricked into leaning too heavily on their most recent observations when making a decision.

Recency bias is just one of many biases that can creep into someone’s picking process. Maybe you’re biased toward your home team, or maybe you identify with a player and desperately want him or her to succeed. All of this can push your bracket in the wrong direction. Even seasoned professionals fall into these traps.

Modeling Upsets

Machine learning can defend against these pitfalls.

In machine learning, statisticians, mathematicians and computer scientists train a machine to make predictions by letting it “learn” from past data. This approach has been used in many diverse fields, including marketing, medicine and sports.

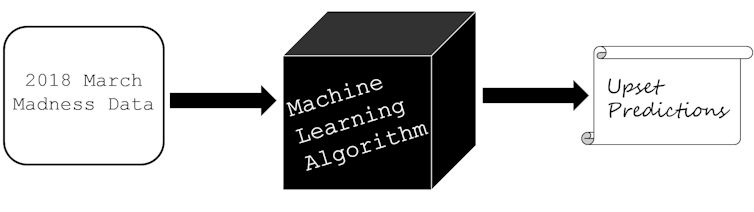

Machine learning techniques can be likened to a black box. First, you feed the algorithm past data, essentially setting the dials on the black box. Once the settings are calibrated, the algorithm can read in new data, compare it to past data and then spit out its predictions.

Matthew Osborne, CC BY-SA
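The black box in the figure can be sketched in a few lines. This is a minimal, hypothetical example, not the authors’ program: it fits a one-feature logistic regression (one of the algorithms named below) by batch gradient descent to synthetic data, where the only “statistic” is the seed gap between the two teams. Fitting the weight `w` and bias `b` is the dial-setting step; `upset_probability` is the calibrated box reading in a new game. The seed-gap feature, the made-up rule that generates the data, and the learning-rate and epoch settings are all assumptions.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, lr=0.5, epochs=500):
    """Set the 'dials' (weight w, bias b) by batch gradient descent on log-loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # derivative of log-loss w.r.t. the logit
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def upset_probability(w, b, seed_gap):
    # Same scaling as in training: seed gap divided by the maximum gap of 15.
    return sigmoid(w * (seed_gap / 15) + b)


# Synthetic history, NOT real tournament results.
rng = random.Random(0)
FIRST_ROUND_GAPS = [1, 3, 5, 7, 9, 11, 13, 15]  # 8 vs 9 ... 1 vs 16
xs, ys = [], []
for _ in range(68):
    for gap in FIRST_ROUND_GAPS:
        true_p = 0.5 - 0.03 * gap  # made-up rule: bigger gaps mean fewer upsets
        xs.append(gap / 15)
        ys.append(1 if rng.random() < true_p else 0)

w, b = fit_logistic(xs, ys)
for gap in (1, 15):
    print(gap, round(upset_probability(w, b, gap), 2))
```

Scaling the seed gap into the range 0 to 1 keeps plain gradient descent stable with a fixed learning rate; a library implementation would handle this fitting step for you.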

In machine learning, there are a variety of black boxes available. For our March Madness project, the ones we wanted are known as classification algorithms. These determine whether a game should be classified as an upset, either by estimating the probability of an upset or by explicitly labeling the game as one.

Our program uses a number of popular classification algorithms, including logistic regression, random forest models and k-nearest neighbors. Each method is like a different “brand” of the same machine; they work as differently under the hood as Fords and Toyotas, but perform the same classification job. Each algorithm, or box, makes its own prediction of the probability of an upset.
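Of these, k-nearest neighbors is the easiest box to look inside. The sketch below, which is illustrative and not the authors’ code, does both jobs described above: it estimates an upset probability as the share of the k most similar past games that were upsets, then turns that into an explicit label with a 0.5 threshold. The two features (seed gap, and a made-up rating difference of underdog minus favorite), the past games, k = 3 and the threshold are all hypothetical choices.

```python
import math

# Hypothetical past games: ((seed gap, rating difference), was_upset).
# The rating difference is a made-up statistic (underdog minus favorite).
PAST_GAMES = [
    ((1, 0.5), 1),
    ((1, -2.0), 0),
    ((3, 1.0), 1),
    ((3, -4.0), 0),
    ((5, 2.0), 1),
    ((5, -6.0), 0),
    ((7, -8.0), 0),
    ((9, -10.0), 0),
    ((11, -3.0), 1),
    ((15, -20.0), 0),
]


def knn_upset_probability(features, past_games, k=3):
    """Fraction of the k most similar past games (Euclidean distance) that were upsets."""
    nearest = sorted(past_games, key=lambda g: math.dist(features, g[0]))[:k]
    return sum(label for _, label in nearest) / k


def knn_classify(features, past_games, k=3, threshold=0.5):
    """Explicit classification: call an upset when the probability reaches the threshold."""
    return knn_upset_probability(features, past_games, k) >= threshold


print(round(knn_upset_probability((2, -1.0), PAST_GAMES), 3))  # 0.667
print(knn_classify((2, -1.0), PAST_GAMES))                     # True
print(knn_classify((15, -18.0), PAST_GAMES))                   # False
```

For the game `(2, -1.0)`, two of the three closest past games were upsets, so the box reports a probability of about 0.667 and labels it an upset. A real model would also standardize the features so that one statistic does not dominate the distance.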

We used the statistics of all 2001 to 2017 first-round teams to set the dials on our black boxes. When we tested one of our algorithms with the 2017 first-round data, it had about a 75 percent success rate. This gives us confidence that analyzing past data, rather than just trusting our gut, can lead to more accurate predictions of upsets, and thus better overall brackets.
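One common way to measure a success rate is to hold out a season: fit on the other seasons only, then count how many of the held-out games the model called correctly. The sketch below does that with a deliberately simple decision stump (a single threshold on one feature) rather than the algorithms named above. The season labels, the `rating_gap` feature (a made-up underdog-minus-favorite rating, so larger values mean a stronger underdog) and every value are invented for illustration.

```python
# Hypothetical first-round records: (season, rating_gap, was_upset).
# None of these numbers are real tournament data.
GAMES = [
    ("A", -6.0, 0), ("A", -2.5, 0), ("A", 1.0, 1), ("A", 3.0, 1), ("A", -0.5, 0),
    ("B", -4.0, 0), ("B", 2.0, 1), ("B", -1.0, 1), ("B", 0.5, 0), ("B", 4.5, 1),
    ("C", -3.0, 0), ("C", 1.5, 1), ("C", 0.8, 1), ("C", 2.5, 1), ("C", -5.0, 0),
]


def fit_stump(train):
    """Pick the threshold t that best separates upsets (rating_gap >= t) in the training data."""
    candidates = sorted({gap for _, gap, _ in train})

    def train_accuracy(t):
        return sum((gap >= t) == bool(upset) for _, gap, upset in train) / len(train)

    return max(candidates, key=train_accuracy)


def success_rate(threshold, test):
    """Fraction of held-out games whose upset/no-upset outcome was called correctly."""
    return sum((gap >= threshold) == bool(upset) for _, gap, upset in test) / len(test)


held_out = "C"
train = [g for g in GAMES if g[0] != held_out]
test = [g for g in GAMES if g[0] == held_out]

threshold = fit_stump(train)
print(threshold)                      # 1.0
print(success_rate(threshold, test))  # 0.8
```

Here the stump learns a threshold of 1.0 from seasons A and B and calls 4 of the 5 season C games correctly. Keeping the scored season out of the training data is what makes the rate an estimate of performance on games the model has not seen.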

What advantages do these boxes have over human intuition? For one, the machines can identify patterns in all of the 2001-2017 data in a matter of seconds. What’s more, since the machines rely only on data, they may be less likely to fall for human psychological biases.

That’s not to say that machine learning will give us perfect brackets. Even though the box bypasses human bias, it’s not immune to error. Its results depend on past data. For example, if a No. 1 seed were to lose in the first round, our model would be unlikely to predict it, because that has never happened before.

Additionally, machine learning algorithms work best with thousands or even millions of examples. Only 544 first-round March Madness games have been played since 2001, so our algorithms will not correctly call every upset. Echoing basketball expert Jalen Rose, our output should be used as a tool in conjunction with your expert knowledge – and luck! – to choose the correct games.
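Assuming “first round” means the 32 games of the round of 64, and counting the 2001 through 2017 tournaments, the 544 figure is simple arithmetic, orders of magnitude short of the thousands or millions of examples these algorithms prefer:

```latex
\underbrace{17}_{\text{tournaments, 2001--2017}} \times \underbrace{32}_{\text{first-round games each}} = 544 \text{ games}
```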

Machine Learning Madness?

We’re not the first people to apply machine learning to March Madness and we won’t be the last. In fact, machine learning techniques may soon be necessary to make your bracket competitive.


You don’t need a degree in mathematics to use machine learning – although it helps us. Soon, machine learning may be more accessible than ever. Those interested can take a look at our models online. Feel free to explore our algorithms and even come up with a better approach yourself.

Matthew Osborne, Ph.D. Candidate in Mathematics, The Ohio State University and Kevin Nowland, Ph.D. Candidate in Mathematics, The Ohio State University

This article was originally published on The Conversation. Read the original article.
